The EU Artificial Intelligence Act is a landmark regulation of the European Union that entered into force on August 1, 2024. It establishes a unified legal framework for the development, deployment, and use of artificial intelligence systems.

Over the past couple of years, AI solutions have been adopted widely across industries. The financial sector benefits significantly from the automation and analytical power that artificial intelligence brings, but under the new regulation many of these systems may be restricted or become subject to strict governance.

How exactly will the AI Act impact banks and fintechs? This article tries to answer that question, but first we need to cover a few details about the AI Act to understand how it works.

What is the AI Act?

Description

The EU AI Act is a regulation that governs the lifecycle of artificial intelligence systems within the European Union. It sets rules for the development, placing on the market, and use of AI, following a risk-based approach. The Act defines responsibilities for providers, deployers, importers, and distributors of AI systems where their outputs are used in the EU. It aims to ensure that AI technologies are safe, transparent, and respectful of fundamental rights.

Definition of AI System

The AI Act defines an AI system as follows:

“‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

Note that the definition does not state outright whether a particular solution is or is not an AI system. The accompanying guidelines are meant to help with that determination:

“The definition of an AI system encompasses a wide spectrum of systems. The determination of whether a software system is an AI system should be based on the specific architecture and functionality of a given system and should take into consideration the seven elements of the definition laid down in Article 3(1) AI Act.”

“No automatic determination or exhaustive lists of systems that either fall within or outside the definition of an AI system are possible.”

As you can see, it is not easy to confirm whether something is definitely an AI system, and further clarifications or amendments to the Act may still follow.

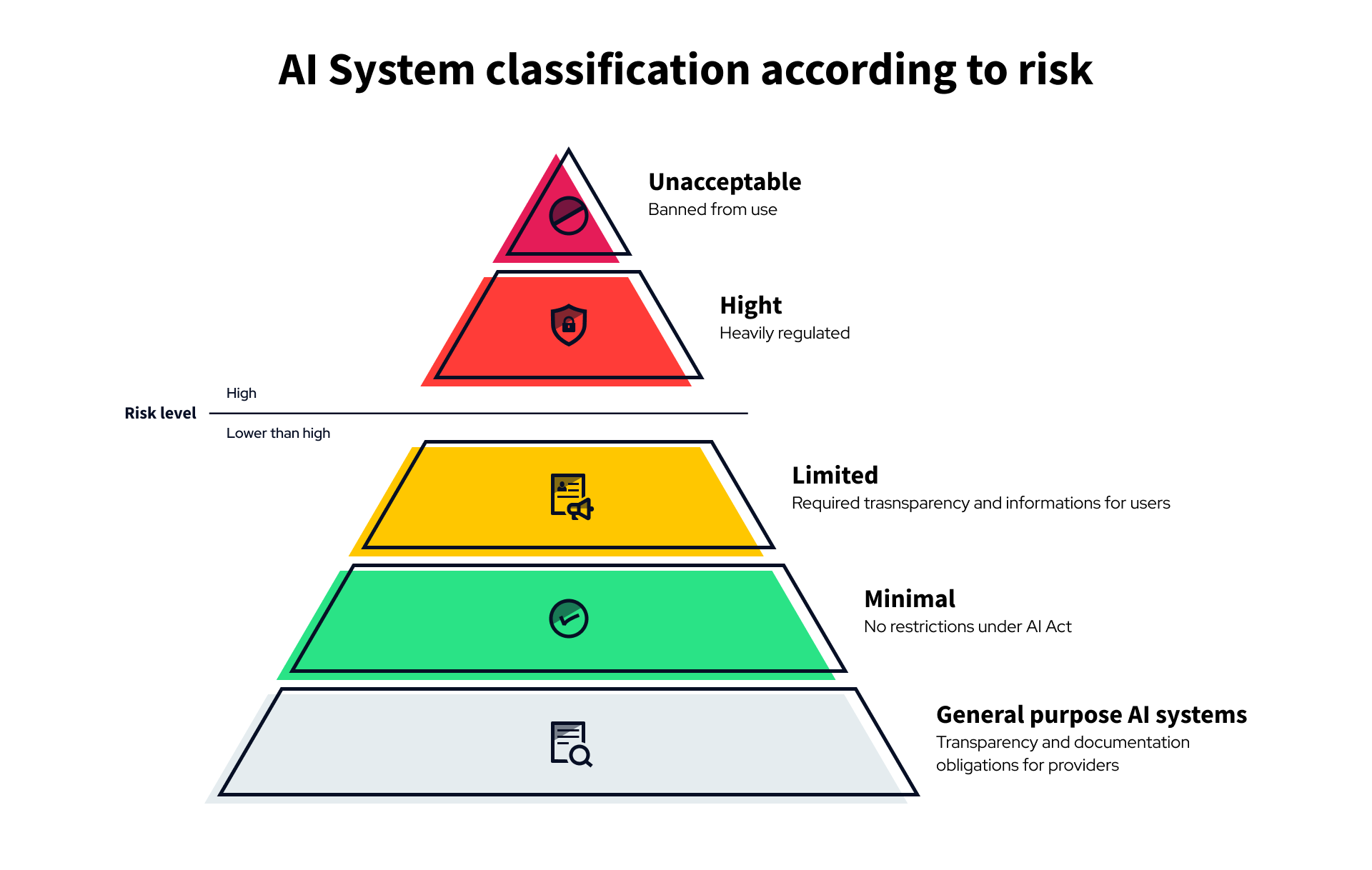

Classification according to risk

The EU AI Act introduces a structured, risk-based classification system for artificial intelligence, which determines the level of regulatory obligations depending on the potential impact of each AI application.

Unacceptable risk

At the top of this hierarchy are AI systems categorized as posing an unacceptable risk. These include systems that exploit human vulnerabilities, manipulate behaviour through subliminal techniques, enable social scoring, or perform real-time remote biometric identification for law enforcement in publicly accessible spaces (subject to narrow exceptions). Such systems are considered incompatible with EU values and are therefore banned.

High risk

The next level includes high-risk AI systems, which operate in sensitive areas such as critical infrastructure, healthcare, education, employment, public administration, and law enforcement. These systems are not prohibited but must comply with strict requirements, including risk management procedures, data governance, documentation, transparency, and human oversight. For instance, AI used to assess job applicants or support judicial decisions would fall into this category.

Lower risk

Below the high-risk level are systems with lower risk, divided into three subcategories.

- Limited risk

Limited-risk AI systems, such as chatbots or AI tools that simulate human interaction, must inform users that they are engaging with an AI system.

- Minimal risk

Minimal-risk systems, including AI used for spam filtering or inventory management, do not face specific legal obligations under the Act, though best practices are encouraged.

- General-purpose AI systems

Finally, general-purpose AI systems, such as large foundation models, are evaluated based on their capabilities and potential systemic impact. These models must meet transparency and documentation obligations, and where they pose systemic risk they face additional requirements that may resemble those for high-risk systems.
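To make the classification easier to reason about, the tiers and the obligations described above can be written down as a small lookup table. This is only an illustrative sketch: the names `RiskTier`, `OBLIGATIONS`, `may_be_deployed`, and `obligations_for` are hypothetical, and the obligation lists simply restate this article's summary rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk categories as summarized in this article (not legal definitions)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    GENERAL_PURPOSE = "general-purpose"


# Obligations per tier, paraphrased from the sections above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: [
        "risk management procedures",
        "data governance",
        "documentation",
        "transparency",
        "human oversight",
    ],
    RiskTier.LIMITED: ["inform users they are engaging with an AI system"],
    RiskTier.MINIMAL: [],  # no specific legal obligations, best practices encouraged
    RiskTier.GENERAL_PURPOSE: ["transparency", "documentation"],
}


def may_be_deployed(tier: RiskTier) -> bool:
    """Only the unacceptable-risk tier is prohibited outright."""
    return tier is not RiskTier.UNACCEPTABLE


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligations listed for the given tier."""
    return list(OBLIGATIONS[tier])


if __name__ == "__main__":
    for tier in RiskTier:
        print(tier.value, may_be_deployed(tier), obligations_for(tier))
```

A table like this is useful mainly as a starting point for an internal inventory; assigning a real system to a tier still requires legal analysis.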

General responsibilities in implementation

The EU AI Act defines the roles and responsibilities of the various parties involved in the AI system lifecycle to ensure accountability and compliance across the entire supply chain.

Provider

The provider is the entity that develops or markets an AI system under its name or trademark. Providers bear the most extensive obligations, particularly for high-risk and general-purpose AI systems. They are responsible for conducting risk assessments, ensuring conformity through technical documentation, registering high-risk systems, and maintaining compliance throughout the system’s lifecycle, including after it enters the market.

Deployer

Entities that use AI systems, referred to as deployers, are also subject to specific responsibilities, especially when using high-risk systems. They must operate the systems according to the provided instructions, implement appropriate human oversight, and ensure that the AI’s output is interpreted correctly. In certain cases, such as systems that interact directly with individuals or involve biometric recognition, deployers are required to inform affected persons that they are interacting with AI or being subject to its analysis.

Importer and distributor

Importers and distributors also play a crucial role in maintaining compliance. Importers must verify that systems brought into the EU market meet all regulatory requirements, including proper documentation and CE marking for high-risk systems. Distributors, on the other hand, must ensure that the products they supply are properly labelled and accompanied by required documentation. If either party becomes aware of non-compliance, they are obliged to take corrective actions and inform relevant authorities. This coordinated approach ensures that responsibility for AI safety and legality is shared across the market.

Supervision and penalties

The EU AI Act establishes a system of supervision and enforcement to ensure that all stakeholders comply with its provisions. National supervisory authorities will be designated by each EU member state to oversee implementation within their jurisdictions. These authorities will have the power to investigate AI systems, request technical documentation, conduct audits, and mandate corrective measures when necessary. In cases of serious non-compliance, such as placing prohibited systems on the market or violating obligations for high-risk systems, authorities can impose significant administrative penalties.

The penalties under the Act are designed to be proportionate but dissuasive. The most severe violations, such as deploying banned AI systems, may result in fines of up to €35 million or 7% of the company’s global annual turnover*. Other breaches, including failure to comply with high-risk system requirements, may lead to fines of up to €15 million or 3% of turnover*. Providing false, incomplete, or misleading information to authorities can incur penalties of up to €7.5 million or 1% of global turnover*.

*Whichever is higher.
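The "whichever is higher" rule is easy to misread, so here is a minimal worked sketch of the arithmetic. The function and enum names (`Violation`, `max_fine_eur`) are hypothetical, the figures are the caps quoted above, and the result is only the upper bound of a possible fine, not a prediction of what an authority would actually impose.

```python
from enum import Enum


class Violation(Enum):
    """(fixed cap in EUR, share of global annual turnover in percent)."""
    PROHIBITED_PRACTICE = (35_000_000, 7)
    HIGH_RISK_NON_COMPLIANCE = (15_000_000, 3)
    MISLEADING_INFORMATION = (7_500_000, 1)


def max_fine_eur(violation: Violation, global_annual_turnover_eur: int) -> int:
    """Upper bound of the fine: the fixed cap or the turnover share, whichever is higher."""
    fixed_cap_eur, turnover_percent = violation.value
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based_eur = global_annual_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based_eur)


if __name__ == "__main__":
    # Turnover of EUR 2 billion: 7% is EUR 140 million, above the EUR 35 million cap.
    print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 2_000_000_000))
    # Turnover of EUR 100 million: 7% is EUR 7 million, so the EUR 35 million cap applies.
    print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 100_000_000))
```

For a large bank the turnover-based figure dominates, while for a small fintech the fixed amount sets the ceiling in this simplified calculation.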

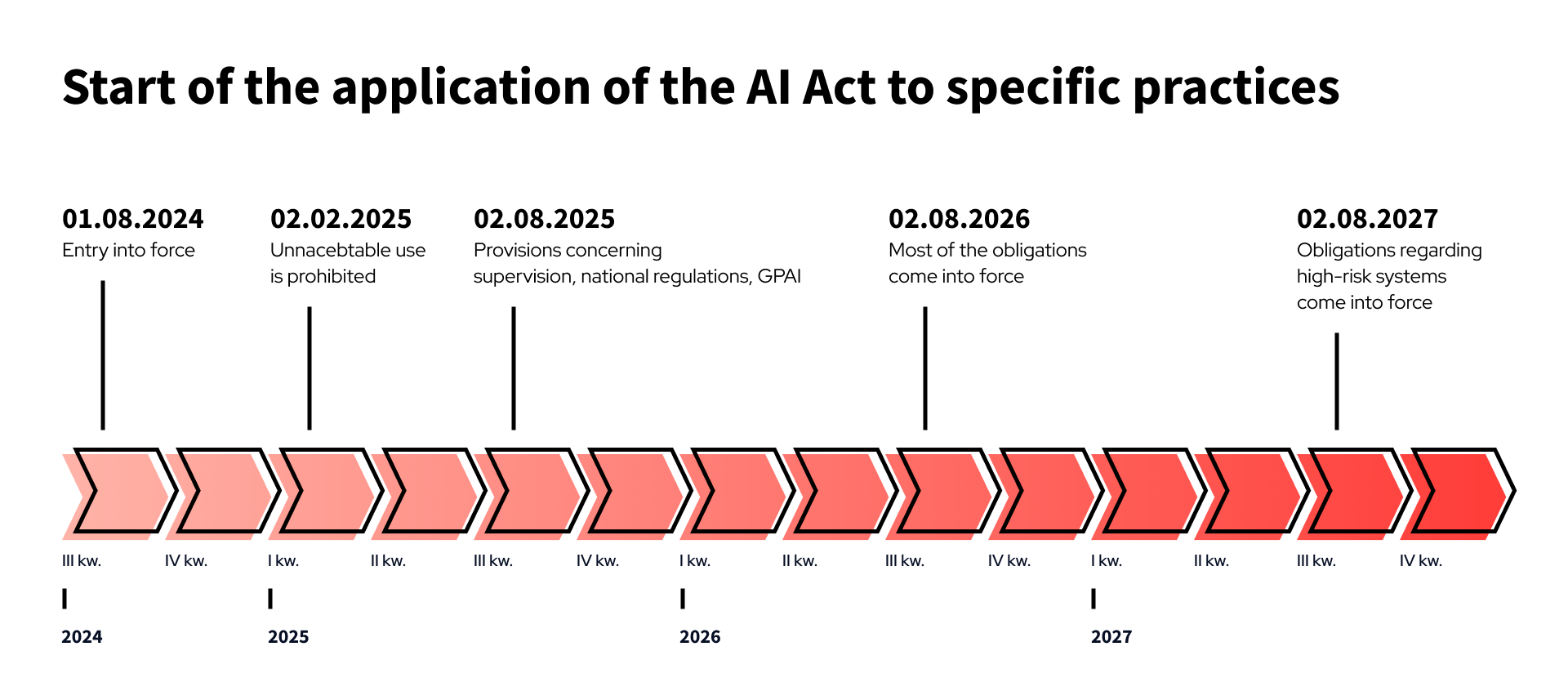

Important timeframes

What does it mean for banks and fintechs?

The AI Act will have a significant impact on banks and fintech companies, given their reliance on advanced algorithms and automated decision-making in credit scoring, fraud detection, customer onboarding, and risk assessment. Many of these applications may fall under the high-risk category, especially when they influence access to financial services or involve biometric identification and profiling. As a result, financial institutions will need to implement risk management frameworks, maintain detailed technical documentation, and ensure transparency in how AI-driven decisions are made and communicated to customers.
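As a practical first step, an institution might keep an inventory of its AI use cases and flag the ones that match the criteria mentioned above. The sketch below is a rough screening aid under stated assumptions: the `AIUseCase` fields and the `needs_high_risk_review` rule are hypothetical and only mirror the two signals named in this article, so a flagged entry means "send to legal review", not "is high-risk".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    """One entry in a hypothetical internal AI inventory."""
    name: str
    influences_access_to_financial_services: bool = False
    uses_biometric_identification_or_profiling: bool = False


def needs_high_risk_review(use_case: AIUseCase) -> bool:
    """Flag use cases matching either signal named in the article."""
    return (
        use_case.influences_access_to_financial_services
        or use_case.uses_biometric_identification_or_profiling
    )


# Example inventory; the entries and their flags are illustrative only.
inventory = [
    AIUseCase("credit scoring", influences_access_to_financial_services=True),
    AIUseCase("customer onboarding (face match)",
              uses_biometric_identification_or_profiling=True),
    AIUseCase("internal document search"),
]

review_queue = [u.name for u in inventory if needs_high_risk_review(u)]

if __name__ == "__main__":
    print(review_queue)
```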

Banks and fintechs that integrate general-purpose AI models into their services will also be subject to new transparency and information-sharing obligations. They will need to understand the capabilities and limitations of the models they deploy, particularly if these are sourced from external providers. Additionally, these institutions must designate trained personnel to oversee AI systems and ensure compliance with legal requirements throughout the system’s use. For smaller fintechs, this may require scaling up internal expertise and adjusting operational practices. Overall, the Act introduces challenges but also opportunities for institutions to strengthen trust, improve data governance and security, and differentiate themselves through responsible AI use.

Conclusion

The introduction of the EU AI Act marks a decisive step toward more responsible and transparent use of artificial intelligence across all sectors, with particularly strong implications for the financial industry. Banks and fintech companies, which have adopted AI to drive efficiency and customer experience, must now adapt to a more structured and legally binding framework.

While many aspects of the regulation are still unclear and will require either clarification in the Act itself or interpretation by national supervisory authorities in coordination with the EU, it is still a step in the right direction. We hope that the AI Act will set a new standard for how artificial intelligence should be used: not just effectively, but responsibly.

Sources: